Nvidia Corp. and OpenAI on Monday unveiled what they termed "the biggest AI infrastructure deployment in history," a sweeping agreement to build computing capacity at unprecedented scale to power OpenAI's future artificial intelligence models.

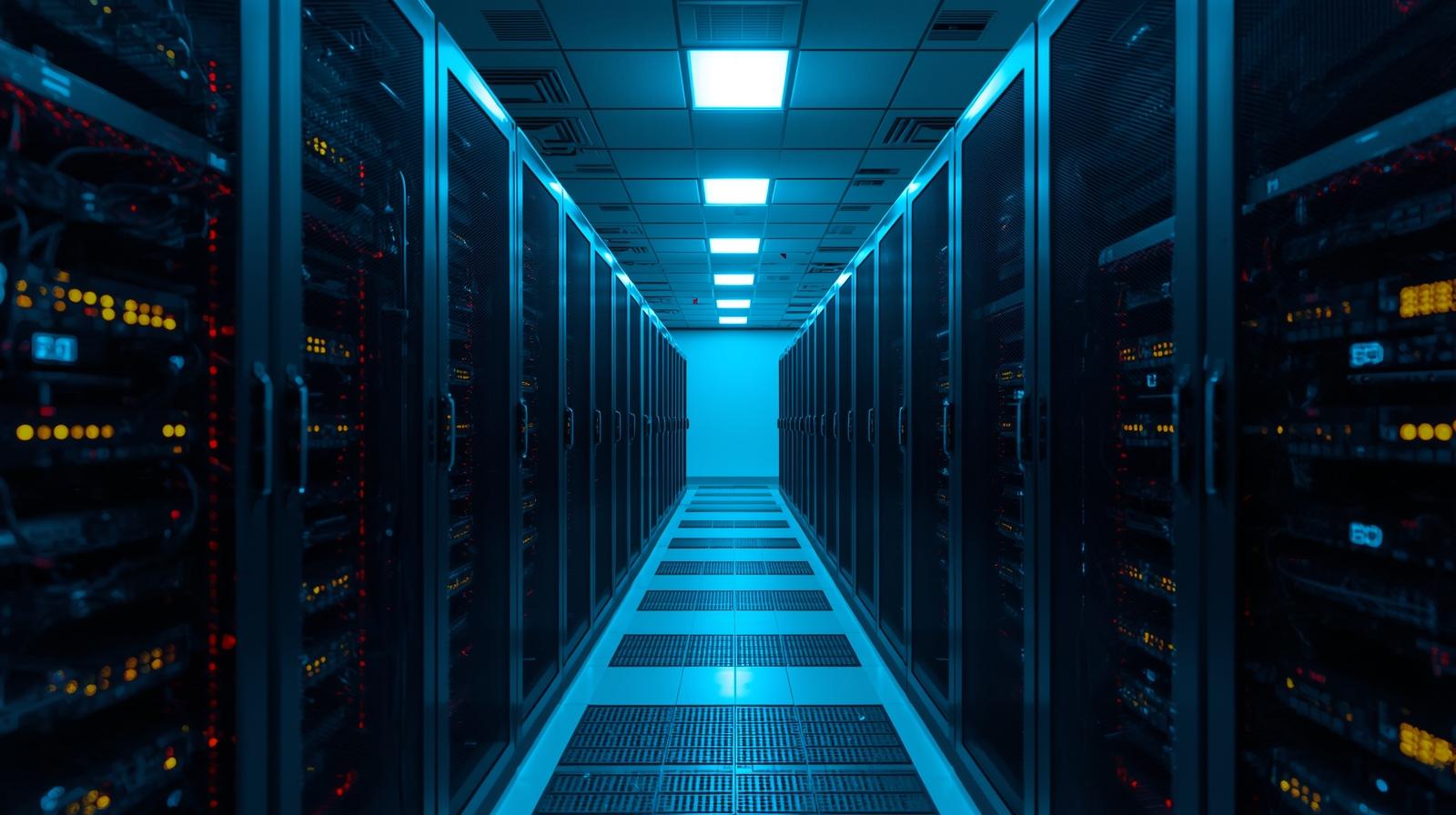

The two companies signed a letter of intent (LOI) under which Nvidia will supply and co-deploy at least 10 gigawatts of AI computing capacity for OpenAI, amounting to millions of Nvidia systems in data centres. To support the build-out of this infrastructure, including data centre facilities, power and networking, Nvidia intends to invest up to US$100 billion in OpenAI, phased in as each gigawatt of capacity is brought online.

Under the deal:

- The first phase, involving deployment of the initial gigawatt of Nvidia’s “Vera Rubin” platform, is expected to come online in the second half of 2026.

- OpenAI and Nvidia will work together to align their hardware, software and infrastructure road maps; Nvidia will be OpenAI’s preferred compute and networking partner for its growing “AI factory” infrastructure.

OpenAI CEO Sam Altman said “everything starts with compute,” emphasizing that without vast scale, the next frontier of AI—“agentic reasoning” and large-context models—would not be possible. Nvidia CEO Jensen Huang said the partnership represents the “next leap forward” from the companies’ earlier work, including deploying their first DGX systems together.

According to Reuters, Nvidia shares rose as much as 4.4% following the announcement, reaching a record intraday high. Shares of Oracle, a partner in OpenAI's broader Stargate infrastructure program, also gained.

Analysis/Implications

The agreement solidifies Nvidia's position as a dominant supplier of AI hardware. For OpenAI, which has also been developing its own chips and working with other cloud providers, securing dedicated capacity directly from Nvidia may offer greater control over latency, cost and supply-chain risk.

Some analysts, however, warn that such tight coupling could raise competition and regulatory concerns. Because Nvidia would be both OpenAI's hardware supplier and one of its investors, questions have been raised about whether the arrangement could give Nvidia outsized influence over OpenAI's development, or squeeze out rival chipmakers and model developers.

Deploying 10 gigawatts of computing capacity (a figure that describes the electrical power the systems draw, roughly the output of ten large nuclear reactors) would also place enormous demands on power infrastructure and logistics. Securing reliable energy, cooling and site permits will be a major engineering, regulatory and environmental challenge.

What’s Next

The two companies expect to finalize the full agreement in "the coming weeks." They aim to bring the first gigawatt online in the second half of 2026, with further capacity, and the corresponding tranches of Nvidia's investment, to follow.

OpenAI will also continue its other compute initiatives, including its in-house chip development, as it manages growing demand for both training and inference (model serving) workloads.